Replication & Public Perception


This month a study came out that tried to estimate how well social-science research replicates. Camerer et al. (2018) took twenty-one studies published in Nature and Science and tried to replicate them. Thirteen replications were successful, meaning that a significant effect in the same direction as in the original study could be found. I looked up the Altmetric scores of these studies to see how they fared in public perception. Altmetrics measure how widely a scientific work reaches the general public by counting how often it is posted on Facebook, tweeted, picked up by media outlets, etc. I have laid them out in the graph below:

![Altmetric scores of the studies replicated by Camerer et al. (2018)](_plot.png)

As we move along the graph from left to right, we can assume that the audience becomes more general: the paper is no longer shared only within a small community of specialists but by many members of the interested public. And we see that most of the successfully replicated studies drop out quickly as the score rises. This would mean that the picture the general public has of the results of the social sciences is deeply flawed, since the public seems to be exposed mostly to the results that we would now consider less certain.

To put it differently: if Camerer et al. succeeded in choosing representative studies, and if the scores I got from Altmetric are reliable, then whenever a friend tells me about a social-science study he has read about, I am usually justified in offering him a bet that the result will not replicate. This holds as long as my friend is not a specialist in the field with access to papers that did not enjoy high popularity. Let’s say he is so far from the field that he only hears about studies with more than 1200 mentions. Then our odds are even. But as we move along the graph from right to left, the odds turn in my favor: first 2:1 (at 800), then 4:1 (at 500), and they stay better than his until around 100 mentions, where the rest of the replicated studies make their appearance.
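The betting odds above can be read off a list of (mentions, replicated) pairs by keeping only the studies above a threshold. The following is a minimal sketch; the function name `betting_odds` and the toy data are hypothetical and invented for illustration, not the actual Camerer et al. scores.

```python
from fractions import Fraction


def betting_odds(studies, threshold):
    """Return the odds (failed : replicated) among studies with more than
    `threshold` mentions, as a Fraction, or None if no replicated study
    clears the threshold.

    `studies` is a list of (mentions, replicated) pairs.
    """
    # Keep only the replication outcomes of studies the friend would hear of.
    visible = [ok for mentions, ok in studies if mentions > threshold]
    replicated = sum(visible)  # True counts as 1
    failed = len(visible) - replicated
    if replicated == 0:
        return None  # no replicated study visible: odds undefined or unbounded
    return Fraction(failed, replicated)


# Hypothetical toy data, NOT the real Altmetric scores.
toy = [(1500, True), (1300, False), (900, False),
       (700, True), (300, False), (120, True)]

for t in (1200, 800, 100):
    print(t, betting_odds(toy, t))
```

A result of 2 means 2:1 in favor of betting against replication; 1 means even odds.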

This is a bit disturbing, as it suggests that, from the point of view of a public that never hears of most of these studies, the share of replicable results is far worse than the 57% to 67% that Camerer et al. report (depending on which measure we choose).
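One way to make "from the point of view of the public" concrete is to weight each study by its number of mentions, assuming (and this is only an assumption) that people encounter studies roughly in proportion to how often they are shared. The sketch below compares that exposure-weighted rate with the plain replication rate; `replication_rates` and the toy data are hypothetical.

```python
def replication_rates(studies):
    """Return (plain_rate, exposure_weighted_rate) for (mentions, replicated) pairs.

    plain_rate: share of studies that replicated, each study counted once.
    exposure_weighted_rate: share of all mentions that went to replicated
    studies, an assumed proxy for what a member of the public would see.
    """
    if not studies:
        raise ValueError("need at least one study")
    plain = sum(ok for _, ok in studies) / len(studies)
    total = sum(mentions for mentions, _ in studies)
    if total == 0:
        raise ValueError("need at least one mention to weight by")
    weighted = sum(mentions for mentions, ok in studies if ok) / total
    return plain, weighted


# Hypothetical toy data, NOT the real scores.
toy = [(1000, False), (800, False), (100, True), (50, True)]
plain, weighted = replication_rates(toy)
print(f"plain: {plain:.0%}, exposure-weighted: {weighted:.0%}")
```

With these invented numbers half of the studies replicate, but only a small fraction of the mentions go to replicated studies.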

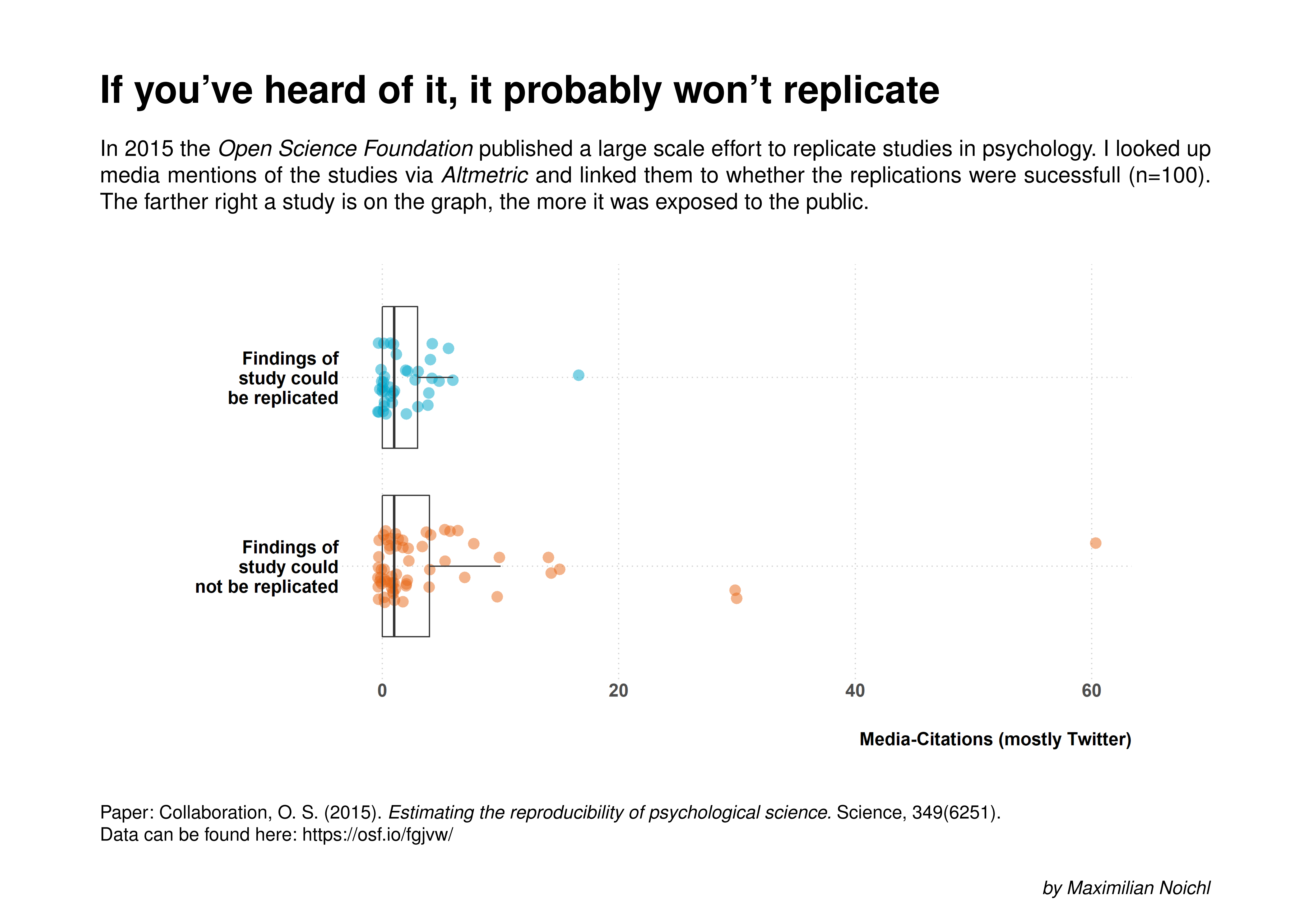

To see how widespread this effect is, I took the data from the large replication project of the Open Science Collaboration (2015) and looked up the Altmetric scores of its studies.

As we can see, here again three studies that could not be replicated were more popular than the first one that could, and eleven more rank ahead of the second successful replication. Note, however, that these studies were generally less popularized than those examined by Camerer et al., by about an order of magnitude. I am not sure what to make of that, as it seems to suggest that the effect persists even within research communities, since studies shared this rarely are presumably read mostly by specialists.
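The counts above ("three before the first success, eleven more before the second") come from ranking the studies by popularity and counting the failed replications between consecutive successes. A minimal sketch, with a hypothetical function name and invented toy data:

```python
def failures_before_successes(studies):
    """Rank (mentions, replicated) pairs by mentions, most popular first, and
    return, for each successful replication in that order, how many failed
    replications rank between it and the previous success."""
    ranked = sorted(studies, key=lambda s: s[0], reverse=True)
    gaps, run = [], 0
    for _, ok in ranked:
        if ok:
            gaps.append(run)  # failures seen since the last success
            run = 0
        else:
            run += 1
    return gaps


# Hypothetical toy data, NOT the Open Science Collaboration studies.
toy = [(40, True), (90, False), (60, True), (80, False), (70, False), (50, False)]
print(failures_before_successes(toy))
```

Because `sorted` is stable, studies with equal scores keep their input order; real data with ties would need an explicit tie-breaking rule.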

Several factors might contribute to this effect. On the part of the public and the news media, I can think of a novelty bias and a tendency to share politically interesting findings or, more generally, findings that confirm a certain ideological leaning. But I suppose researchers must play some part in this too, or we would not find the effect at the small scale.

If you want to see how I got these numbers, you can look up the code on GitHub. Please let me know below if you have ideas for improving it, or if you have explanations or further considerations.
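The following is not the code from the repository, just a minimal sketch of how a single score could be looked up. It assumes Altmetric's public v1 details endpoint (`https://api.altmetric.com/v1/doi/<doi>`) and its `score` field; whether the "mentions" plotted above correspond to this field or to a different count is not stated here. The function names are hypothetical, and the downloader is passed in as a parameter so the logic can be checked without network access.

```python
import json
import urllib.error
import urllib.request

# Assumption: Altmetric's public v1 details endpoint, keyed by DOI.
API_URL = "https://api.altmetric.com/v1/doi/{doi}"


def altmetric_url(doi):
    """Build the (assumed) Altmetric API URL for a DOI."""
    return API_URL.format(doi=doi)


def fetch_json(url):
    """Download and decode a JSON document; return None if the server answers 404."""
    try:
        with urllib.request.urlopen(url) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no record tracked for this DOI
            return None
        raise


def altmetric_score(doi, fetch=fetch_json):
    """Return the Altmetric score for a DOI, or 0.0 if none is tracked.

    `fetch` is injectable so the function can be tested offline.
    """
    record = fetch(altmetric_url(doi))
    if record is None:
        return 0.0
    return float(record.get("score", 0.0))
```

Treating an untracked DOI as a score of 0.0 is a design choice: it keeps rarely shared studies in the ranking instead of silently dropping them.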

Literature

Camerer, C. F., Dreber, A., Holzmeister, F., Ho, T.-H., Huber, J., Johannesson, M., … Wu, H. (2018). Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nature Human Behaviour, 2(9), 637–644. https://doi.org/10.1038/s41562-018-0399-z

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. https://doi.org/10.1126/science.aac4716

Author Maximilian Noichl

LastMod 2018-09-08