| Franz J. Vesely > CompPhys Tutorial > Stochastics |

3.2 Other DistributionsSubsections

3.2.1 Transformation of probability densitiesGiven $p(x)$ and a bijective mapping $y=f(x); \; x=f^{-1}(y)$; then

$

\vert p(y) dy \vert = \vert p(x)dx \vert

$

or

$

p(y) = p(x) {\textstyle |} \frac{\textstyle dx}{\textstyle dy} |

= p[f^{-1}(y)] \; | \frac{\textstyle df^{-1}(y)}{\textstyle dy} |

$

This relation holds for any kind of density. EXAMPLE: The spectral density of black body radiation is usually written in terms of the angular frequency $\omega$:

$

I(\omega) = \frac{\textstyle \hbar \omega^{3}}{\textstyle \pi c^{3}}

\frac{\textstyle 1}{\textstyle e^{\textstyle \hbar \omega/kT}-1}

$

If we prefer to give the spectral density in terms of the wave length $\lambda \equiv 2 \pi c / \omega$, we have

$

I(\lambda) = I[\omega(\lambda)] \; | \frac{\textstyle d \omega}{\textstyle d \lambda} |

= \frac{\textstyle \hbar}{\textstyle \pi c^{3}}

\left( \frac{\textstyle 2\pi c}{\textstyle \lambda}

\right)^{3} \frac{\textstyle 1}{\textstyle e^{(hc/\lambda)/kT}-1}

\left( \frac{\textstyle 2 \pi c}{\textstyle \lambda^{2}}\right)

$

3.2.2 Transformation MethodGiven a probability density $p(x)$:Find a bijective mapping $y=f(x)$ such that the distribution of $y$ is $p(y)=c $:

$

p(x) = c | \frac{\textstyle dy}{\textstyle dx} | = c

| \frac{\textstyle df(x)}{\textstyle dx} |

\;\;\;{\rm or} \;\;\;

| \frac{\textstyle df(x)}{\textstyle dx} | = \frac{\textstyle 1}{\textstyle c} p(x)

$

It is easy to see that the mapping

$

\begin{eqnarray}

f(x) &=& P(x) \equiv \int \limits_{\textstyle a}^{\textstyle x} p(x') dx'

\end{eqnarray}

$

fulfills this condition, with $c=1$.

EXAMPLE: Let

$

p(x) = \frac{\textstyle 1}{\textstyle\pi} \frac{\textstyle 1}{\textstyle 1+x^{2}}

\;\;\;{\rm (Lorentzian),} \;\;\;\;

x \epsilon (\pm \infty)

$

Then $y = P (x)=1/2+(1/\pi) \arctan x$, with the inverse $P^{-1} (y)=\tan[\pi(y-1/2)]$. Therefore:

Geometrical interpretation: $y$ is sampled from an equidistribution $\in (0,1)$ and $x=P^{-1}(y)$. $\Longrightarrow$ The regions where $P(x)$ is steeper (i.e. $p(x)$ is large) are hit more frequently. EXERCISE: (See also here) (a) Warm-up (no coding): A powder of approximately spherical metallic grains is used for sintering. The diameters of the grains obey a normal distribution with $\langle d \rangle =2 \mu m$ and $\sigma=0.25 \mu m$. Calculate the distribution of the grain volumes. (b) Lorentzian density: Write a codelet which produces random variates with probability density

$

p(x) = \frac{\textstyle 1}{\textstyle\pi} \frac{\textstyle 1}{\textstyle 1+x^{2}}

\;\;\;{\rm (Lorentzian),} \;\;\;\;

x \epsilon (\pm \infty)

$

using the transformation method. Plot a frequency histogram of the produced random numbers and compare it with the given $p(x)$. Also, plot the distribution function $P(x)$ pertaining to the given density. 3.2.3 Generalized Transformation Method:Same as before, but with n-tuples of random variates:Let $x=(x_{1}, \dots ,x_{n})$, $x \epsilon D_{x}$, and $y= f(x)$ with $ y \epsilon D_{y}$. Then

$

p(y) = p(x) | \frac{\textstyle \partial x }{\textstyle \partial y } |

$

($|\partial x/ \partial y|$ ... Jacobi determinant of the transformation $x= f^{-1}(y)$.) The following procedure for the production of Gaussian random variates may be understood as an application of this. $\Longrightarrow$ Normal distribution: 3.1

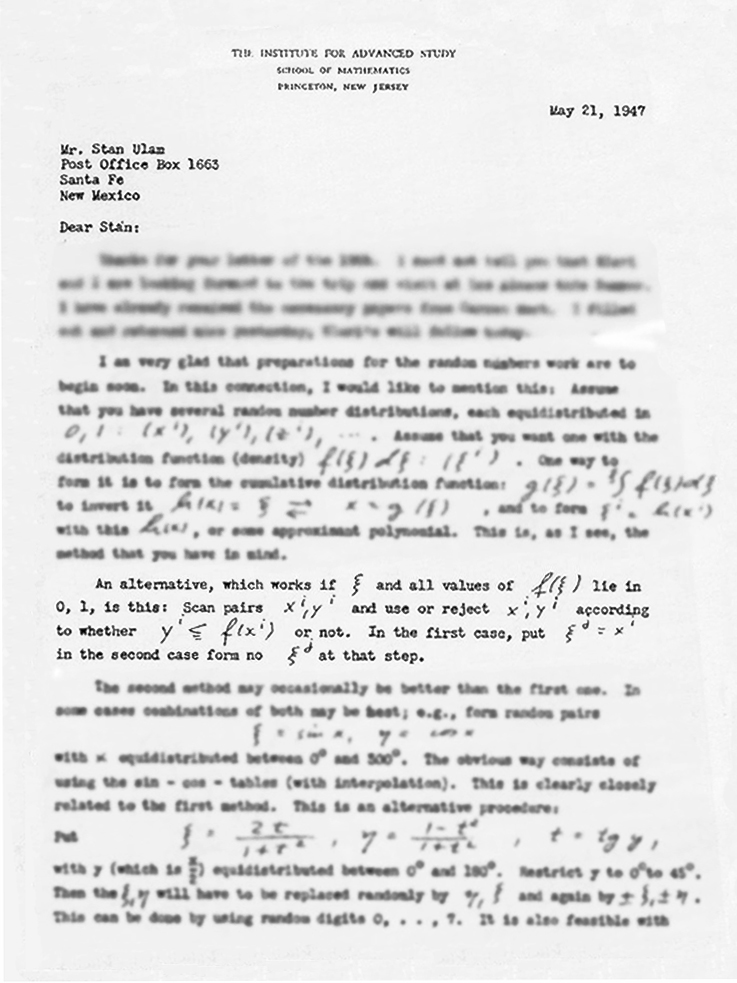

Footnote 3.1: 3.2.4 Rejection MethodA classic: created by John von Neumann, applicable to almost any $p(x)$.Here is the original formulation:

And this is how we read it today:

The method is simple and fast, but it becomes inefficient whenever the area of the rectangle $[a,b] \otimes [0,p_{m}]$ is large compared to the area below the graph of $p(x)$ (which is, of course, equal to $1$.) In that case the "Improved Rejection Method" may be applicable: $\Longrightarrow$

3.2.5 Multivariate Gaussian Distribution

$

p(x_{1}, \dots, x_{n}) = \frac{1}{\sqrt{\textstyle (2 \pi)^{n} S}}

e^{\textstyle -\frac{1}{2} \sum \sum g_{ij} x_{i} x_{j}}

$

or

$

\begin{eqnarray}

p(x) & = & \frac{\textstyle1}{\textstyle\sqrt{(2 \pi)^{n} S}}

e^{\textstyle -\frac{1}{2} x^{T} \cdot {G} \cdot x}

\equiv \frac{1}{\sqrt{\textstyle (2 \pi)^{n}S}}

e^{\textstyle -\frac{1}{2}Q}

\end{eqnarray}

$

with the covariance matrix of the $x_{i}$

$

\begin{eqnarray}

S & \equiv & G^{-1} \equiv

\left( \begin{array}{ccc}

\langle x_{1}^{2} \rangle & \langle x_{1}x_{2} \rangle & \dots \\

\vdots & \langle x_{2}^{2} \rangle & \dots \\

& & \ddots \\

\end{array}\right)

\end{eqnarray}

$

$S \equiv | S|$ is the determinant of this matrix. $S$ and $G$ are symmetric, their eigenvalues are called $\sigma_{i}^{2}$ and $\gamma_{i}$ (sorry!). EXAMPLE: Assume that two Gaussian variates have the variances $s_{11} \equiv \langle x_{1}^{2} \rangle =3$, $s_{22} \equiv \langle x_{2}^{2} \rangle =4$, and the covariance $s_{12} \equiv \langle x_{1}x_{2} \rangle =2$:

$

S = \left( \begin{array}{cc}3 & 2\\ \\2 & 4 \end{array} \right) ; \; \;

G \equiv S^{-1} =

\left( \begin{array}{cc} \frac{1}{2} & -\frac{1}{4} \\

\\ -\frac{1}{4} & \frac{3}{8}\end{array} \right)

$

The quadratic form $Q$ in the exponent is then $Q=(1/2) x_{1}^{2} - (1/2)x_{1}x_{2} + (3/8)x_{2}^{2} $, and the lines of equal density (that is, of equal $Q$) are ellipses which are inclined with respect to the $x_{1,2}$ coordinate axes: Rotate the axes of the ellipsoids $Q=const$ to coincide with the coordinate axes: $\Longrightarrow$ cross correlations vanish! Principal axis transformation:

Having found $T$, we arrive at the following prescription for the production of correlated Gaussian variables: $\Longrightarrow$

Let's try it out... EXAMPLE: Once more, let

$

S=\left( \begin{array}{cc}3 & 2\\ \\2 & 4\end{array} \right) ,

\;\; {\rm with\; the\; inverse}\;\;

G=\left( \begin{array}{cc}\frac{1}{2}& - \frac{1}{4}\\ \\-\frac{1}{4} & \frac{3}{8}\end{array} \right)

$

Principal axis transformation: The eigenvalues of $S$ are $\sigma_{1,2}^{2}= (7\pm\sqrt{17})/2 = 5.562|1.438$, and the corresponding eigenvectors are

$

s_{1}=\left( \begin{array}{r} 0.615 \\ \\0.788 \end{array} \right) \;\;\;\;\;

s_{2}=\left( \begin{array}{r} 0.788 \\ \\-0.615 \end{array} \right) \;\;\;\;\;

{\rm Thus}\;\;\;\;\;

T=\left( \begin{array}{cc}0.615 & 0.788\\ \\0.788 & -0.615\end{array} \right)

$

Generator: To produce pairs $(x_{1},x_{2})$ of Gaussian random numbers with the given covariance matrix:

EXERCISE: (See also here) Write a program that generates a sequence of bivariate Gaussian random numbers with the statistical properties as assumed in the foregoing example. Determine $\langle x_{1}^{2}\rangle$, $\langle x_{2}^{2}\rangle$, and $\langle x_{1}x_{2}\rangle$ to see if they indeed approach the given values of $3$, $4$, and $2$. 3.2.6 Homogeneous distributions in Orientation Space

Fig. 3.7: Simple as can be...

Fig. 3.8: More refined: Marsaglia's recipe (Generalization to hyperspherical surfaces: see Vesely, Comp. Phys..) vesely 2005-10-10

|