Next: 2.3.3 Gauss-Seidel Relaxation (GSR)

Up: 2.3 Iterative Methods

Previous: 2.3.1 Iterative Improvement

2.3.2 Jacobi Relaxation

Divide the given matrix $A$ according to

$$A = L + D + R$$

where $D$ contains only the diagonal elements of $A$, while $L$ and $R$ are the left (strictly lower triangular) and right (strictly upper triangular) parts of $A$, respectively.
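The following minimal sketch performs the splitting for a matrix stored as a list of rows. The function name `split_ldr` and the 3×3 test matrix are hypothetical illustrations, not taken from the text.

```python
def split_ldr(A):
    """Split square matrix A (list of rows) as A = L + D + R.

    L: strictly lower ("left") part, D: diagonal, R: strictly upper ("right") part.
    """
    n = len(A)
    L = [[A[i][j] if j < i else 0.0 for j in range(n)] for i in range(n)]
    D = [[A[i][j] if j == i else 0.0 for j in range(n)] for i in range(n)]
    R = [[A[i][j] if j > i else 0.0 for j in range(n)] for i in range(n)]
    return L, D, R


# Hypothetical 3x3 test matrix, not taken from the text.
A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
L, D, R = split_ldr(A)
# The three parts add back up to A element by element.
assert all(L[i][j] + D[i][j] + R[i][j] == A[i][j]
           for i in range(3) for j in range(3))
```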

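Choose $B = D$ (the easily invertible approximation to $A$ in the scheme of 2.3.1 Iterative Improvement) and write the iteration formula as

$$D \cdot x^{(k+1)} = b - (L + R) \cdot x^{(k)}$$

or

$$x_i^{(k+1)} = \frac{1}{a_{ii}} \Big[ b_i - \sum_{j \neq i} a_{ij}\, x_j^{(k)} \Big], \qquad i = 1, \dots, N$$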
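The componentwise formula translates almost literally into code. Below is a minimal sketch, assuming a dense matrix given as a list of rows with nonzero diagonal elements. The name `jacobi` and the test system are hypothetical.

```python
def jacobi(A, b, x0, n_iter):
    """Jacobi relaxation for A x = b, i.e. D x^(k+1) = b - (L + R) x^(k).

    Every component of x^(k+1) is computed from the *old* iterate x^(k) only,
    so the N updates inside one sweep are independent of each other.
    """
    n = len(A)
    x = list(x0)
    for _ in range(n_iter):
        x_new = []
        for i in range(n):
            # off-diagonal row sum, i.e. the i-th component of (L + R) x^(k)
            s = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x_new.append((b[i] - s) / A[i][i])
        x = x_new
    return x


# Hypothetical diagonally dominant system (not from the text); exact x = (1, 2, 3).
A = [[4.0, -1.0, 0.0],
     [-1.0, 4.0, -1.0],
     [0.0, -1.0, 4.0]]
b = [2.0, 4.0, 10.0]
x = jacobi(A, b, [0.0, 0.0, 0.0], n_iter=50)
print(x)
```

Because each sweep reads only the previous iterate, the inner loop could be evaluated in any order or in parallel. This independence is the defining feature of Jacobi as opposed to Gauss-Seidel relaxation.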

EXAMPLE:

In $A \cdot x = b$ let $A$ and $b$ be given. Starting from an estimated solution $x^{(0)}$ and using the diagonal part $D$ of $A$ in the iteration, we find the increasingly accurate solutions $x^{(1)}, x^{(2)}, \ldots$
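To show the same kind of behaviour numerically, here is a sketch on a hypothetical 2×2 system. It is not the example of the text, whose numbers are not reproduced here. It prints each iterate together with its maximum deviation from the exact solution.

```python
def jacobi_2x2_iterates(A, b, x0, n_iter):
    """Return [x^(0), ..., x^(n_iter)] for Jacobi on a 2x2 system A x = b."""
    (a11, a12), (a21, a22) = A
    x1, x2 = x0
    iterates = [(x1, x2)]
    for _ in range(n_iter):
        # both right-hand sides use the previous iterate (x1, x2)
        x1, x2 = (b[0] - a12 * x2) / a11, (b[1] - a21 * x1) / a22
        iterates.append((x1, x2))
    return iterates


# Hypothetical system (not the one of the text); exact solution (1/11, 7/11).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
exact = (1.0 / 11.0, 7.0 / 11.0)
its = jacobi_2x2_iterates(A, b, (0.0, 0.0), n_iter=12)
errors = [max(abs(x1 - exact[0]), abs(x2 - exact[1])) for x1, x2 in its]
for k, ((x1, x2), err) in enumerate(zip(its, errors)):
    print(f"k={k:2d}  x1={x1:.6f}  x2={x2:.6f}  max error={err:.2e}")
```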

Convergence rate:

Writing the Jacobi scheme in the form

$$x^{(k+1)} = J \cdot x^{(k)} + D^{-1} \cdot b$$

with the Jacobi block matrix

$$J \equiv -D^{-1} \cdot (L + R),$$

convergence requires that all eigenvalues of $J$ be smaller than one in absolute value. Denoting the largest eigenvalue modulus (the spectral radius) of $J$ by $\lambda_J$, we have for the asymptotic rate of convergence

$$r_J \equiv |\ln \lambda_J|,$$

i.e. for large $k$ each iteration reduces the error by about a factor $e^{-r_J}$. Gaining one more correct decimal digit therefore takes roughly $\ln 10 / r_J \approx 2.3 / r_J$ iterations.
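The rate can also be estimated without an eigenvalue solver: repeatedly apply $J$ to an error vector and average the logarithm of the per-step shrink factor, since $-\frac{1}{k}\ln\|J^k e\| \to -\ln\lambda_J$. The sketch below does this under two assumptions: the starting vector has a component along the dominant eigenvectors, and $J$ is not nilpotent. The function names and the test matrix are hypothetical.

```python
import math


def jacobi_matrix(A):
    """J = -D^{-1} (L + R): entries -a_ij / a_ii off the diagonal, zeros on it."""
    n = len(A)
    return [[0.0 if i == j else -A[i][j] / A[i][i] for j in range(n)]
            for i in range(n)]


def estimated_rate(A, k=60):
    """Estimate r_J = -ln(lambda_J) from the average shrink factor of J^k e.

    Uses normalised power steps; assumes the starting vector has a component
    along the dominant eigenvectors and that J is not nilpotent.
    """
    J = jacobi_matrix(A)
    n = len(A)
    e = [1.0 / math.sqrt(n)] * n          # unit-length starting error
    log_shrink = 0.0
    for _ in range(k):
        e = [sum(J[i][j] * e[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(c * c for c in e))
        log_shrink += math.log(norm)      # ln of this step's shrink factor
        e = [c / norm for c in e]
    return -log_shrink / k                # -(1/k) ln ||J^k e0|| -> -ln(lambda_J) = r_J


A = [[4.0, 1.0], [1.0, 3.0]]  # hypothetical; here lambda_J = sqrt(1/12)
r = estimated_rate(A)
print(f"r_J ~ {r:.4f}, lambda_J ~ {math.exp(-r):.4f}")
```

Renormalising at every step keeps the vector from underflowing when $\lambda_J$ is small.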

In the above example $\lambda_J = \ldots$ and $r_J = \ldots$.
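For a general 2×2 system the spectral radius can be worked out by hand. This is a derivation for any 2×2 matrix with nonzero diagonal, not a reconstruction of the numbers above:

$$A = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}, \qquad J = -D^{-1}(L+R) = \begin{pmatrix} 0 & -a_{12}/a_{11} \\ -a_{21}/a_{22} & 0 \end{pmatrix}$$

$$\det(J - \lambda I) = \lambda^2 - \frac{a_{12}\,a_{21}}{a_{11}\,a_{22}} = 0 \;\Longrightarrow\; \lambda_J = \sqrt{\left|\frac{a_{12}\,a_{21}}{a_{11}\,a_{22}}\right|}, \qquad r_J = |\ln \lambda_J| = \frac{1}{2}\,\left|\ln\left|\frac{a_{11}\,a_{22}}{a_{12}\,a_{21}}\right|\right|$$

If $a_{12}a_{21}/(a_{11}a_{22}) < 0$ the two eigenvalues are purely imaginary, but their modulus is still $\lambda_J$ as given.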

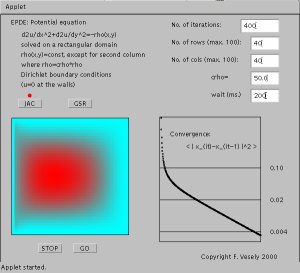

The electrostatic problem shown before was treated by Jacobi

iteration:
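For a potential problem on a grid, one Jacobi sweep replaces each free interior value by the mean of its four neighbours from the previous sweep. The sketch below assumes this standard 5-point discretisation of the Laplace equation. The grid size, boundary values and function name are hypothetical and not the setup of the figure. The test case uses a linear potential, which the 5-point formula reproduces exactly, so the converged result can be checked.

```python
def jacobi_laplace(phi, fixed, n_sweeps):
    """Jacobi sweeps for the discrete 2D Laplace equation.

    phi   : list of rows with the potential (boundary values already set)
    fixed : same shape, True where the potential is prescribed (edges, electrodes)
    Every free interior point is replaced by the mean of its four neighbours,
    all taken from the previous sweep (hence a copy per sweep).
    """
    ny, nx = len(phi), len(phi[0])
    for _ in range(n_sweeps):
        new = [row[:] for row in phi]
        for i in range(1, ny - 1):
            for j in range(1, nx - 1):
                if not fixed[i][j]:
                    new[i][j] = 0.25 * (phi[i - 1][j] + phi[i + 1][j]
                                        + phi[i][j - 1] + phi[i][j + 1])
        phi = new
    return phi


# Hypothetical test case: on an 8x8 grid the edges carry phi = x/(nx-1),
# whose exact (discrete-harmonic) solution is linear in x everywhere.
nx = ny = 8
fixed = [[i in (0, ny - 1) or j in (0, nx - 1) for j in range(nx)]
         for i in range(ny)]
phi0 = [[j / (nx - 1) if fixed[i][j] else 0.0 for j in range(nx)]
        for i in range(ny)]
phi = jacobi_laplace(phi0, fixed, n_sweeps=400)
max_err = max(abs(phi[i][j] - j / (nx - 1)) for i in range(ny) for j in range(nx))
print(f"max deviation from the linear solution: {max_err:.2e}")
```

The `fixed` mask lets interior electrodes be held at constant potential in the same way as the outer boundary.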


Franz J. Vesely Oct 2005

See also: "Computational Physics - An Introduction," Kluwer-Plenum 2001